Abstract

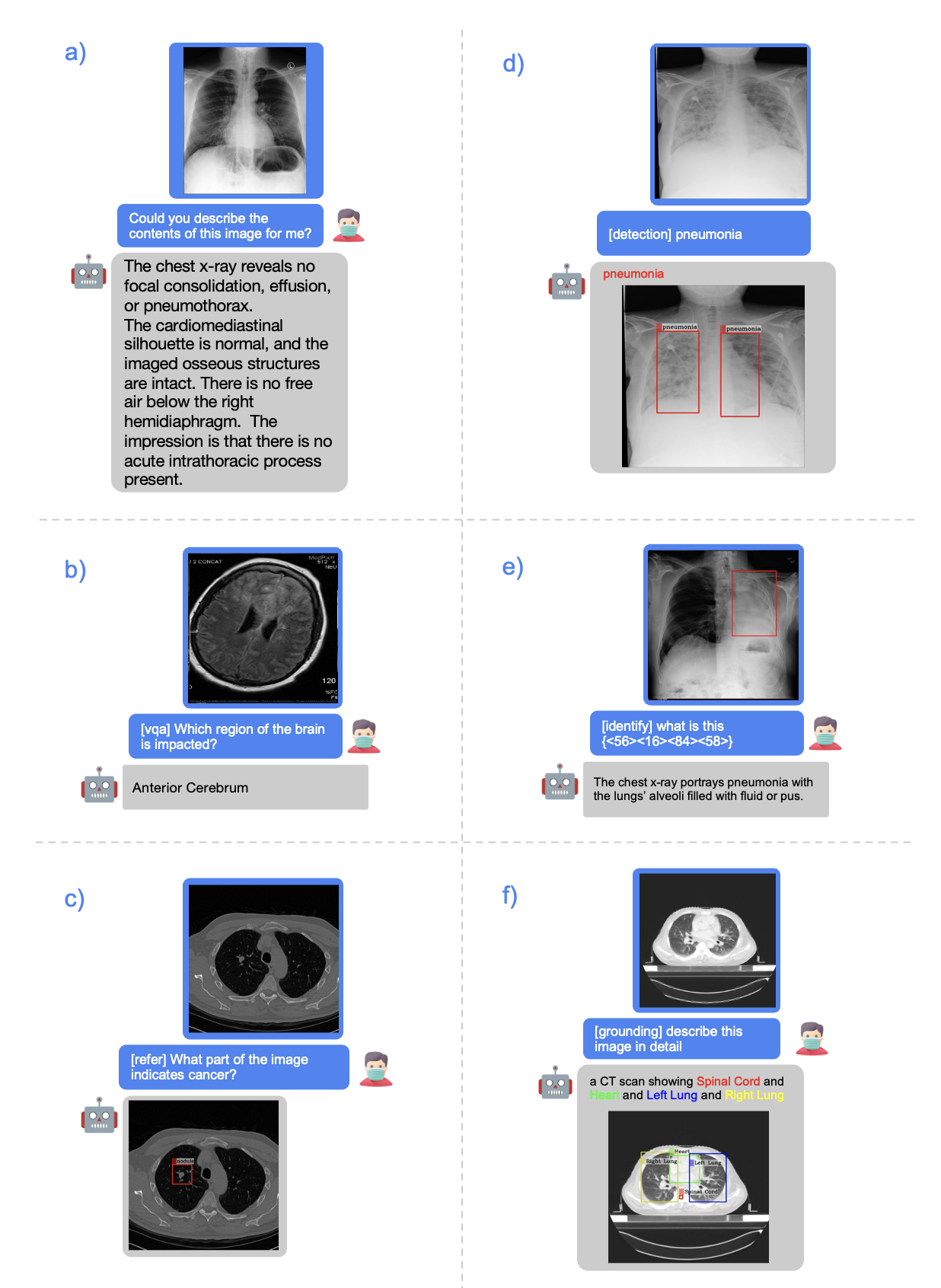

Recent advancements in artificial intelligence (AI) have precipitated significant breakthroughs in healthcare, particularly in refining diagnostic procedures. However, previous studies have often been constrained to limited functionalities. This study introduces MiniGPT-Med, a vision-language model derived from large-scale language models and tailored for medical applications. MiniGPT-Med demonstrates versatility across imaging modalities, including X-rays, CT scans, and MRIs. The model can perform tasks such as medical report generation, visual question answering (VQA), and disease identification within medical imagery. Its integrated processing of both image and textual clinical data markedly improves diagnostic accuracy. Our empirical assessments confirm MiniGPT-Med's superior performance on disease grounding, medical report generation, and VQA benchmarks, a significant step toward closing the gap in assisting radiology practice. Furthermore, it achieves state-of-the-art performance on medical report generation, surpassing the previous best model by 19% in accuracy. MiniGPT-Med promises to become a general interface for radiology diagnoses, enhancing diagnostic efficiency across a wide range of medical imaging applications.

Model

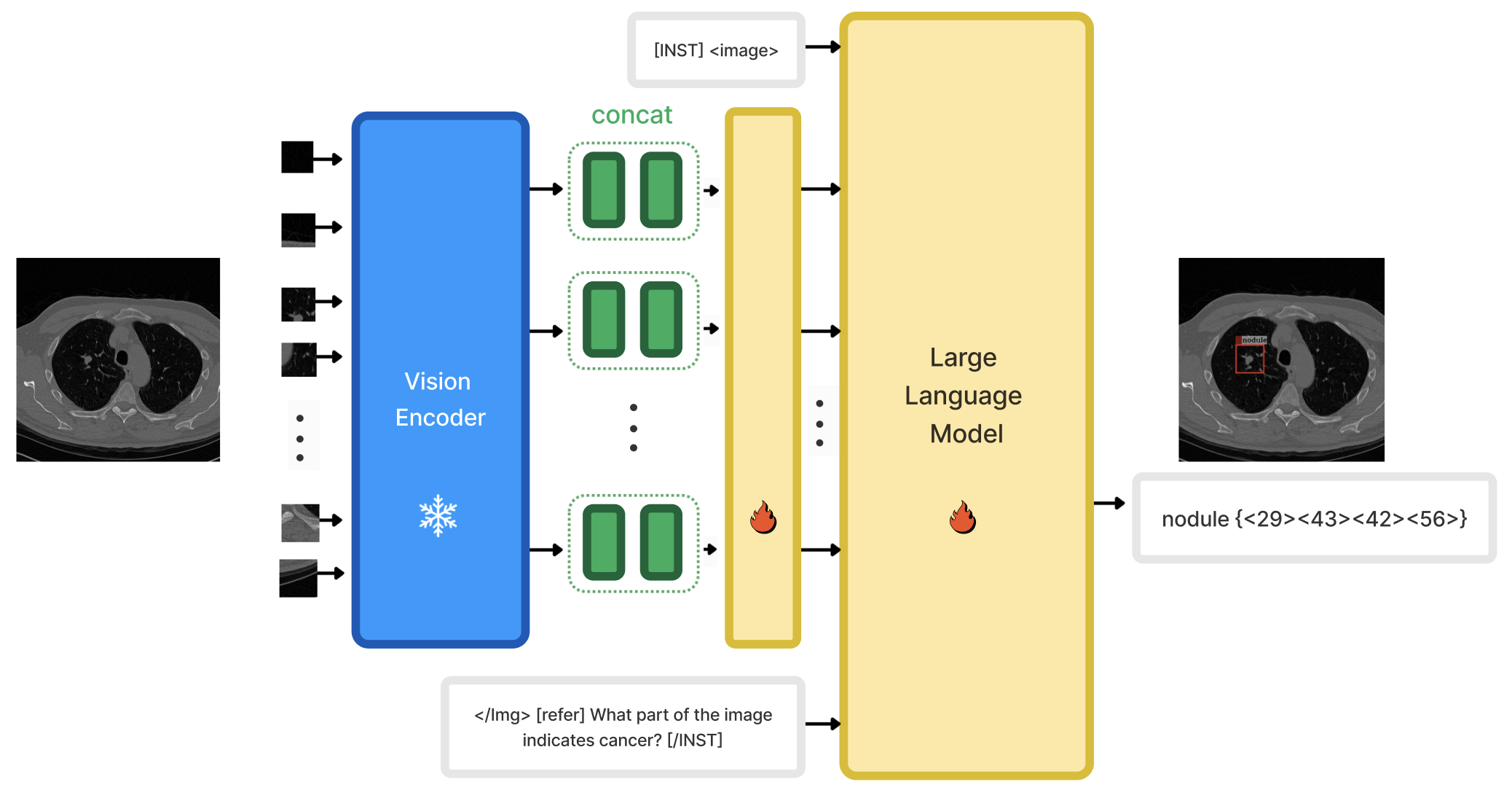

The MiniGPT-Med architecture comprises a vision encoder, a linear projection layer, and a large language model. It processes a single medical image, transforming it into visual semantic features via a pretrained vision encoder. These features are concatenated into a single visual token. A linear projection layer then maps these visual tokens into the large language model’s space. Throughout the training process, we keep the vision encoder’s parameters frozen while fine-tuning the large language model and the linear projection layer.

The architecture of MiniGPT-Med.
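
The data flow described above can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the toy dimensions, the group size used when concatenating adjacent features, the random stand-in for the vision encoder output, and the helper names `concat_adjacent` and `linear_project` are all assumptions made for illustration.

```python
import random


def concat_adjacent(features, group):
    """Concatenate every `group` adjacent feature vectors into one longer token."""
    assert len(features) % group == 0, "feature count must be divisible by group"
    tokens = []
    for start in range(0, len(features), group):
        merged = []
        for vec in features[start:start + group]:
            merged.extend(vec)
        tokens.append(merged)
    return tokens


def linear_project(tokens, weight, bias):
    """Apply y = W x + b to each token; `weight` has shape (out_dim, in_dim)."""
    return [
        [sum(w * x for w, x in zip(row, tok)) + b for row, b in zip(weight, bias)]
        for tok in tokens
    ]


# Hypothetical toy sizes, chosen only to keep the example small.
rng = random.Random(0)
num_patches, vis_dim, group, llm_dim = 8, 3, 4, 5

# Stand-in for the output of the frozen, pretrained vision encoder.
patch_features = [
    [rng.uniform(-1, 1) for _ in range(vis_dim)] for _ in range(num_patches)
]

# Step 1: concatenate adjacent visual features (group size is an assumption).
visual_tokens = concat_adjacent(patch_features, group)

# Step 2: project the visual tokens into the language model's embedding space.
weight = [
    [rng.uniform(-1, 1) for _ in range(vis_dim * group)] for _ in range(llm_dim)
]
bias = [0.0] * llm_dim
llm_inputs = linear_project(visual_tokens, weight, bias)

# Training setup from the text: encoder frozen, projection and LLM fine-tuned.
trainable = {"vision_encoder": False, "projection": True, "language_model": True}

print(len(visual_tokens), len(visual_tokens[0]))  # 2 12
print(len(llm_inputs), len(llm_inputs[0]))        # 2 5
```

In this toy setting, 8 patch features of width 3 become 2 visual tokens of width 12, which the projection maps to width 5, the assumed embedding size of the language model.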